Source: www.delltechnologies.com

MLPerf is an industry consortium established for Machine Learning (ML)/Artificial Intelligence (AI) solution benchmarking and best practices. MLPerf benchmarks enable fair comparison of the training and inference performance of ML/AI hardware, software and algorithms/models.

MLPerf Training measures how fast a system can train machine learning models. This includes image classification, lightweight and heavyweight object detection, language translation, natural language processing, recommendation and reinforcement learning, each with specific datasets, quality targets and reference implementation models. You can read about the latest Dell MLPerf training performance in my previous blog.

The MLPerf Inference suite measures how quickly a trained neural network can evaluate new data and perform forecasting or classification for a wide range of applications. MLPerf Inference includes image classification, object detection and machine translation, each with specific models, datasets, quality targets, and server and multi-stream latency constraints. MLPerf validated and published results for MLPerf Inference v0.7 on October 21, 2020. While all training happens in the datacenter, inference is more geographically dispersed. To cover both cases, MLPerf Inference is further divided into datacenter and edge categories.

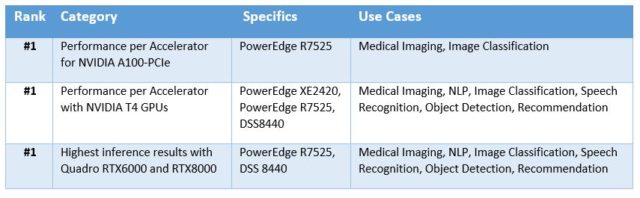

Dell EMC had a total of 210 submissions for MLPerf Inference v0.7 in the datacenter category using various Dell EMC platforms and accelerators from leading vendors. We achieved impressive results when compared to other submissions in the same class of platforms. A few of the highlights are captured below.

Dell EMC portfolio meets customers’ inference needs from on-premises to the edge

- Dell EMC is the only vendor with submissions on a variety of inference solutions, leveraging GPUs, FPGAs and CPUs in the datacenter/private cloud and at the edge.

- Dell EMC is also the only vendor to submit results on virtualized infrastructure with vCPUs and NVIDIA virtual GPUs (vGPU) on VMware vSphere. Datacenter operators can leverage virtualization benefits such as cloning, vMotion, distributed resource scheduling, and suspend/resume of VMs with NVIDIA vGPU technology for their accelerated AI workloads.

- The breadth of solutions and performance leadership across multiple categories make it crystal clear that we enable our customers to be on the fast track for their inference needs from on-premises datacenter to edge AI infrastructure.

We’re proud of what we’ve done, but it’s still all about helping customers adopt AI. By sharing our expertise and providing guidance on infrastructure for AI, Dell EMC helps customers become successful and get their use case into production. Performance details and additional information are available in our technical blog. Learn more about PowerEdge servers here.

¹ These are derived from the submitted results in the MLPerf datacenter closed category. Rankings and claims are based on Dell analysis of published MLPerf data. Per-accelerator performance is calculated by dividing the primary metric of total performance by the number of accelerators reported.
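
The per-accelerator normalization described in the footnote can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the function name `per_accelerator` and the sample figures are hypothetical and are not taken from any published MLPerf result.

```python
def per_accelerator(total_performance: float, accelerator_count: int) -> float:
    """Divide a system's primary metric by its reported accelerator count.

    total_performance: the primary metric for the whole system
        (for example, queries or samples per second).
    accelerator_count: the number of accelerators reported for that system.
    """
    if accelerator_count <= 0:
        raise ValueError("accelerator_count must be a positive integer")
    return total_performance / accelerator_count


# Hypothetical figures for illustration only, not published MLPerf data.
example = per_accelerator(total_performance=1000.0, accelerator_count=4)
print(f"Per-accelerator performance: {example}")
```

Normalizing this way lets systems with different accelerator counts be compared on the same footing, although it does not account for differences in host CPU, memory or interconnect.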